If you're a website owner or a digital marketer, it's essential to understand how search engine crawlers interact with your site. One critical aspect of managing crawler access is the robots.txt file - a simple text file that communicates rules for web robots on what they can and cannot crawl on your site. In this guide, we'll dive deep into the world of robots.txt, exploring its history, best practices, and advanced topics to help you optimize your website's crawler access and enhance overall SEO performance.

Understanding Robots.txt

History and Evolution of Robots.txt

Origin and Purpose

The concept of robots.txt emerged in 1994, when search engines and web crawlers were becoming increasingly common and website owners needed a way to control how crawlers accessed their content. Its original purpose was to keep web spiders out of specific pages or sections of a site, mainly to reduce server load and to stop crawlers from wandering into areas that weren't meant for them.

Changes and Updates Over Time

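Over the years, robots.txt has evolved alongside search engines' crawling practices. Some directives, such as crawl-delay, were never part of the original convention: Bing and some other crawlers honor it, but Google has never supported it. In 2019 Google proposed formalizing the protocol and, at the same time, stopped honoring unofficial rules such as noindex inside robots.txt. The IETF published the Robots Exclusion Protocol as RFC 9309 in 2022.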

Basics of Robots.txt

File Location and Syntax

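A robots.txt file must be placed in the root directory of a host, e.g., http://example.com/robots.txt. Crawlers look for it only there, and its rules apply only to that protocol, host, and port, so a file in a subdirectory is ignored and a subdomain such as blog.example.com needs its own file. The syntax is simple: each directive appears on its own line, and the two core directives are User-agent and Disallow.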
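To make the location rule concrete, here is a minimal Python sketch that derives the robots.txt URL governing any page. The helper name `robots_url` is hypothetical; it simply keeps the page's scheme, host, and port and replaces everything else with /robots.txt.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    robots.txt applies per scheme + host + port, so the file always sits at
    the root of the page's own origin.
    """
    parts = urlsplit(page_url)
    # Keep scheme and netloc (host plus optional port); drop path, query, fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://example.com/private/report.html"))
# https://example.com/robots.txt
print(robots_url("https://blog.example.com:8443/posts/1?ref=home"))
# https://blog.example.com:8443/robots.txt
```

Note that a page on blog.example.com or on a non-default port resolves to a different robots.txt than one on example.com.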

User-Agent and Disallow Directives

The User-agent directive specifies which web robot or crawler the rules apply to, while the Disallow directive indicates which pages or sections of the site should be blocked. For example:

User-agent: Googlebot
Disallow: /private/

This rule will prevent Googlebot from accessing the /private/ directory on the website.
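One way to check a rule like this is Python's standard-library urllib.robotparser module. Treat the sketch below as an approximation rather than Google's behavior: this parser matches plain path prefixes, applies the first matching rule in file order, and doesn't understand * or $ inside paths. It also follows the original 1994 convention that blank lines separate groups, which is why a User-agent line and its rules should stay on consecutive lines.

```python
from urllib.robotparser import RobotFileParser

# The rule group from the example above; its lines must stay together.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot is kept out of /private/ but may crawl everything else.
print(parser.can_fetch("Googlebot", "https://example.com/private/report.html"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/"))  # True
# No group names Bingbot and there is no "*" group, so Bingbot is unrestricted.
print(parser.can_fetch("Bingbot", "https://example.com/private/report.html"))  # True

# In this parser, a blank line between User-agent and Disallow discards the
# unfinished group, so the Disallow rule is silently dropped.
split = RobotFileParser()
split.parse("User-agent: Googlebot\n\nDisallow: /private/".splitlines())
print(split.can_fetch("Googlebot", "https://example.com/private/report.html"))  # True
```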

Comments and Wildcards

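Robots.txt supports comments: everything from a # symbol to the end of the line is ignored. Additionally, the * wildcard matches any sequence of characters. In a User-agent line, * addresses every crawler that has no more specific group of its own; in a path, it matches any run of characters. A $ at the end of a path anchors the match to the end of the URL: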

User-agent: *
Disallow: /*.pdf$

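This rule tells all crawlers that support these patterns (Googlebot and Bingbot both do) not to crawl URLs ending in .pdf anywhere on the site. Because of the $ anchor, a URL such as /guide.pdf?download=1 does not match.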
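Python's standard-library urllib.robotparser doesn't support these patterns, so here is a minimal, hypothetical sketch of how a crawler might match them, following the semantics described by Google and RFC 9309: * matches any run of characters, a trailing $ anchors the match to the end of the URL, and everything else is matched as a prefix of the path (including any query string). The function names are illustrative, not taken from any library.

```python
import re


def rule_to_regex(rule_path: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex (hypothetical helper)."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape the literal pieces and turn each "*" into ".*".
    body = ".*".join(re.escape(piece) for piece in rule_path.split("*"))
    # A trailing "$" means "end of URL"; \Z anchors at the very end of the string.
    return re.compile(body + (r"\Z" if anchored else ""))


def path_matches(rule_path: str, url_path: str) -> bool:
    # re.match anchors at the start of the string, which gives prefix semantics.
    return rule_to_regex(rule_path).match(url_path) is not None


print(path_matches("/*.pdf$", "/docs/guide.pdf"))             # True
print(path_matches("/*.pdf$", "/docs/guide.pdf?download=1"))  # False
print(path_matches("/*.pdf$", "/docs/pdf-guide.html"))        # False
print(path_matches("/private/", "/private/report.html"))      # True
```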

Common Misconceptions About Robots.txt

- It's a security measure: robots.txt is not a security feature but a set of instructions for web crawlers. It does not prevent unauthorized access, and because the file is publicly readable, listing private paths in it can even point malicious actors toward them.

- It guarantees crawler compliance: While most well-behaved crawlers respect robots.txt rules, there's no guarantee that all crawlers will comply. Malicious bots and poorly designed crawlers may ignore these directives.

- It directly affects rankings: robots.txt is not a ranking factor. It controls which URLs search engines crawl, which in turn shapes what they can index, so incorrect or overly restrictive rules can hurt your site's visibility in search results. Note also that a blocked URL can still appear in search results, without a description, if other pages link to it.

Best Practices for Creating a Robots.txt File

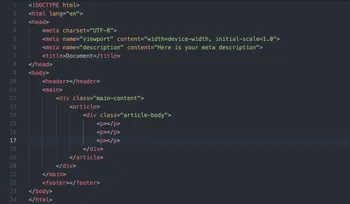

Defining the Structure and Format

File Naming Conventions

The file must be named exactly robots.txt, in lowercase, and served as plain text. Crawlers request only that exact path, so a file named Robots.txt, robot.txt, or robots.txt.bak is simply not found, and your directives are ignored.

Organizing Directives

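Organize your robots.txt rules in a clear and logical manner. Keep each user-agent's rules together in one group, and separate groups with a blank line and a comment for easy readability. Keep in mind that a crawler follows only the group that most specifically matches its name; rules from other groups, including the User-agent: * group, are not added to it: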

# Googlebot directives
User-agent: Googlebot
Disallow: /private/

# Bingbot directives
User-agent: Bingbot
Disallow: /test/
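Because a crawler obeys only its most specific group, rules are not inherited from User-agent: *. The sketch below uses Python's urllib.robotparser as an approximation of real crawlers and extends the example with a hypothetical catch-all group to show this: Googlebot may crawl /test/ even though the * group disallows it, because its own group doesn't.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
# Googlebot directives
User-agent: Googlebot
Disallow: /private/

# Bingbot directives
User-agent: Bingbot
Disallow: /test/

# Everyone else (hypothetical catch-all group added for this example)
User-agent: *
Disallow: /private/
Disallow: /test/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

page = "https://example.com/test/page.html"
# Googlebot follows only its own group, which says nothing about /test/.
print(parser.can_fetch("Googlebot", page))    # True
# Bingbot's group blocks /test/.
print(parser.can_fetch("Bingbot", page))      # False
# A crawler with no group of its own falls back to the "*" group.
print(parser.can_fetch("DuckDuckBot", page))  # False
```

If you want Googlebot to skip /test/ as well, repeat that rule inside the Googlebot group.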

Identifying Pages and Resources to Block

Duplicate Content

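Be careful with duplicate content. If you block a page that carries a rel="canonical" tag, crawlers can't see that tag, so search engines can't consolidate its signals onto the canonical URL; Google advises against using robots.txt for canonicalization. Use canonical tags for duplicate pages, and reserve robots.txt for large duplicate URL spaces, such as endless parameter combinations, that would otherwise waste crawl budget.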

Sensitive Information

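Keep crawlers out of administrative or account areas, such as login pages, that have no place in search results. Remember that this is only a request to well-behaved crawlers: genuinely sensitive pages and personal data need authentication, and pages that must stay out of search results need a noindex directive rather than a Disallow rule: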

User-agent: *
Disallow: /admin/
Disallow: /users/

Low-Value or Thin Content

Exclude low-value URL spaces, such as internal search results pages or auto-generated archives, so crawlers spend their time on the pages you want to appear in search results.

Allowing Access to Specific Pages and Resources

High-Value Content

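Everything that no rule disallows is crawlable by default, so Allow is mainly useful for carving exceptions out of a broader Disallow rule. Make sure high-value content, such as blog posts or product pages, isn't caught by an overly broad Disallow. Explicit Allow rules like these document that intent, though on their own they change nothing: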

User-agent: *
Allow: /blog/
Allow: /products/
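When Allow and Disallow rules both match a URL, Google and RFC 9309 apply the most specific (longest) matching rule, and Allow wins a tie. The minimal Python sketch below implements that precedence for plain prefix rules only (no wildcards); the function name and the /shop/ paths are hypothetical. Python's urllib.robotparser, by contrast, applies the first matching rule in file order, so its results can differ.

```python
from typing import List, Tuple

# Each rule is a (directive, path) pair, e.g. ("Disallow", "/shop/").
Rule = Tuple[str, str]


def is_allowed(rules: List[Rule], path: str) -> bool:
    """Longest-match precedence for prefix rules (hypothetical helper; no wildcards)."""
    best_len = -1
    allowed = True  # if no rule matches, the URL may be crawled
    for directive, rule_path in rules:
        if not rule_path or not path.startswith(rule_path):
            continue  # an empty rule path matches nothing
        is_allow = directive.lower() == "allow"
        # A longer (more specific) match wins; on a tie, Allow wins.
        if len(rule_path) > best_len or (len(rule_path) == best_len and is_allow):
            best_len = len(rule_path)
            allowed = is_allow
    return allowed


# Allow carving an exception out of a broader Disallow:
shop_rules = [("Disallow", "/shop/"), ("Allow", "/shop/products/")]
print(is_allowed(shop_rules, "/shop/cart"))            # False
print(is_allowed(shop_rules, "/shop/products/shoes"))  # True

# The Allow-only rules from the example above restrict nothing:
article_rules = [("Allow", "/blog/"), ("Allow", "/products/")]
print(is_allowed(article_rules, "/about/"))            # True
```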

XML Sitemaps

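Include XML sitemap locations in your robots.txt file to help search engines discover your important pages. Sitemap lines must use absolute URLs, may appear anywhere in the file, and apply to all crawlers regardless of the User-agent groups around them; you can list more than one: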

Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/post-sitemap.xml
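Sitemap lines are easy to read programmatically. For example, Python's urllib.robotparser (3.8 or later) collects them with site_maps(), independent of any User-agent group. The Disallow: /admin/ group in this sketch is only illustrative.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/post-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the Sitemap URLs in file order, or None if there are none.
print(parser.site_maps())
# ['https://www.example.com/sitemap.xml', 'https://www.example.com/post-sitemap.xml']
```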

Testing and Validating the Robots.txt File

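Using Google Search Console

Google Search Console used to offer a robots.txt Tester for checking directives against Google's own user-agents. Google retired it in late 2023 and replaced it with the robots.txt report, which lists the robots.txt files Google found for your site, when each was last crawled, and any errors or warnings:

- Open the robots.txt report in Google Search Console (under Settings).

- Confirm that each robots.txt file was fetched successfully, and review any errors or warnings.

- Use the URL Inspection tool to check whether a specific URL is blocked for Googlebot.

- Adjust your rules accordingly; after fixing an urgent problem, you can request a recrawl of the file from the report.

For Bingbot, Bing Webmaster Tools provides its own robots.txt tester.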

Manually Testing with a Crawler

Manually test your robots.txt rules by running a crawler, such as Screaming Frog SEO Spider, against your website to ensure that the directives are being correctly applied:

- Configure the crawler to use specific user-agents or settings.

- Begin the crawl and monitor the results, checking for any unexpected access or blocked pages.

- Adjust your robots.txt rules as needed based on the crawl results.
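Between full crawls, a small scripted check can catch regressions, such as a deploy that accidentally blocks product pages. The sketch below is one hypothetical approach: list the URLs you expect to be crawlable or blocked and compare them with what Python's urllib.robotparser reports. It reads the rules from a string so it runs offline; against a live site you could call set_url() and read() instead. Because this parser ignores wildcards and uses first-match order, treat it as a sanity check rather than a replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /users/
"""

# (user-agent, URL, expected can_fetch result): hypothetical expectations.
EXPECTATIONS = [
    ("Googlebot", "https://www.example.com/products/shoes", True),
    ("Googlebot", "https://www.example.com/blog/launch", True),
    ("Googlebot", "https://www.example.com/admin/login", False),
    ("Bingbot", "https://www.example.com/users/42", False),
]


def find_regressions(robots_txt, expectations):
    """Return the cases where the parsed rules disagree with expectations."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        (agent, url, expected)
        for agent, url, expected in expectations
        if parser.can_fetch(agent, url) != expected
    ]


print(find_regressions(ROBOTS_TXT, EXPECTATIONS))  # []
```

Running this in a deployment pipeline turns an accidental Disallow: / into a failed check instead of a drop in crawling.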

Advanced Topics in Robots.txt

Crawl-Delay Directive

Definition and Purpose

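The crawl-delay directive asks a specific user-agent to wait between requests, usually interpreted as a number of seconds. It is non-standard (it is not part of RFC 9309), so support varies: Bing honors it, while Google ignores it and instead slows its crawling automatically when your server responds slowly or returns errors. Where supported, it can help manage server load and prevent overloading: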

User-agent: Bingbot
Crawl-delay: 5
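A crawler that honors the directive has to look it up per user-agent. Here is a minimal Python sketch using urllib.robotparser's crawl_delay() (Python 3.6+); the delay_for helper and its one-second fallback are hypothetical choices, not part of any standard.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def delay_for(rp: RobotFileParser, agent: str, default: float = 1.0) -> float:
    """Seconds to wait between requests for agent (hypothetical helper).

    Falls back to `default` when no group sets a Crawl-delay for this agent.
    """
    delay = rp.crawl_delay(agent)
    return float(delay) if delay is not None else default


print(delay_for(parser, "Bingbot"))    # 5.0
print(delay_for(parser, "Googlebot"))  # 1.0 (no matching group)
```

A polite crawler would then call time.sleep(delay_for(parser, agent)) between consecutive requests to the same host.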

Benefits and Drawbacks

For the crawlers that honor it, the crawl-delay directive can reduce server strain, but it also limits how quickly those search engines can crawl and index your site. It's essential to balance these factors based on your specific needs and resources.

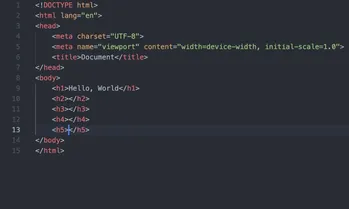

Robots Meta Tags

Overview and Syntax

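Robots meta tags are HTML tags placed in the <head> section of a webpage, specifying indexing and link-following rules for that particular page. Unlike robots.txt, they don't stop a page from being crawled; a crawler has to fetch the page to read them: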

<meta name="robots" content="noindex, nofollow">

This example directive tells all crawlers not to index or follow links on the page.
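To audit pages for these tags, you can parse the HTML rather than search for strings. The minimal sketch below uses Python's built-in html.parser; the class name is hypothetical, and it only reads the generic name="robots" tag, not crawler-specific ones such as name="googlebot".

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; values may be None.
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for token in attr_map.get("content", "").split(","):
                token = token.strip().lower()
                if token:
                    self.directives.add(token)


page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body></body></html>'
meta = RobotsMetaParser()
meta.feed(page)
print(sorted(meta.directives))  # ['nofollow', 'noindex']
```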

When to Use Robots Meta Tags Instead of Robots.txt

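Use robots.txt to control which URLs are crawled, and robots meta tags to control whether individual pages are indexed. The two don't combine well: if robots.txt blocks a page, crawlers never fetch it and so never see its noindex tag. Robots meta tags are the better choice for: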

- Pages with sensitive or private content that must stay out of search results even if other sites link to them; a URL blocked only by robots.txt can still be indexed without its content.

- Pages with content that varies based on user behavior, such as filtered search results.
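The crawl-versus-index distinction is easy to get wrong, so a hypothetical audit like the one below can flag pages whose noindex a crawler will never see because robots.txt blocks them. It uses Python's urllib.robotparser as an approximation of real crawlers, and it assumes you have already collected each page's robots meta directives, for example with an HTML parser.

```python
from urllib.robotparser import RobotFileParser


def audit(robots_txt: str, agent: str, pages: dict) -> list:
    """Flag pages whose noindex directive the crawler can never see.

    `pages` maps a URL to the set of robots meta directives found on it
    (a hypothetical input; collecting it is up to your own tooling).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url, directives in pages.items():
        if "noindex" in directives and not parser.can_fetch(agent, url):
            problems.append(url)
    return problems


robots = "User-agent: *\nDisallow: /drafts/\n"
pages = {
    "https://example.com/drafts/launch.html": {"noindex"},
    "https://example.com/search?q=shoes": {"noindex", "follow"},
}
print(audit(robots, "Googlebot", pages))
# ['https://example.com/drafts/launch.html']
```

The fix for a flagged page is usually to remove the Disallow rule so the noindex can be read.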

Robots Exclusion Protocol (REP)

Overview and History

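The Robots Exclusion Protocol (REP) is the industry standard for managing crawler access to websites. In everyday usage the term covers robots.txt along with robots meta tags and the X-Robots-Tag HTTP header, although the formal specification covers robots.txt only. REP was introduced in 1994, remained an informal convention for decades, and was published by the IETF as RFC 9309 in 2022, with major search engines documenting their own interpretations along the way: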

- Google's Robots Exclusion Protocol documentation (2019)

- Bing's Robots.txt Specifications (2015)

Differences From Robots.txt

REP encompasses more than just robots.txt, including other methods for controlling crawler access and behavior:

- Robots meta tags, as discussed earlier

- X-Robots-Tag HTTP header, which lets the server send the same indexing directives in the HTTP response; it is the only option for non-HTML resources such as PDFs and images
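As an illustration of the X-Robots-Tag header, here is a minimal WSGI application using only Python's standard library that adds X-Robots-Tag: noindex to PDF responses while leaving HTML pages alone. It's a sketch: the paths are hypothetical, and in production you would more likely set the header in your web server or framework configuration.

```python
def app(environ, start_response):
    """Minimal WSGI app: PDF responses carry an X-Robots-Tag header."""
    path = environ.get("PATH_INFO", "/")
    is_pdf = path.lower().endswith(".pdf")
    content_type = "application/pdf" if is_pdf else "text/html; charset=utf-8"
    headers = [("Content-Type", content_type)]
    if is_pdf:
        # Same directive a robots meta tag would carry, but sent in the HTTP
        # response, so it also works for files that have no <head>.
        headers.append(("X-Robots-Tag", "noindex"))
    start_response("200 OK", headers)
    return [b""]  # placeholder body


def response_headers(path):
    """Call the app directly (no server) and return its headers as a dict."""
    captured = {}

    def start_response(status, headers):
        captured.update(headers)

    app({"PATH_INFO": path}, start_response)
    return captured


print(response_headers("/files/report.pdf").get("X-Robots-Tag"))  # noindex
print(response_headers("/blog/post.html").get("X-Robots-Tag"))    # None
```

To try it locally, wsgiref.simple_server.make_server("", 8000, app).serve_forever() serves the app on port 8000.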

Mobile-Specific Robots.txt

Importance in Mobile-First Indexing

Google now uses the mobile version of your pages for indexing (mobile-first indexing) and crawls mainly with Googlebot Smartphone, so it's crucial that crawler access works for the mobile version of your site as well as the desktop one:

- If your mobile site lives on a separate host, create a robots.txt file for it (e.g., http://m.example.com/robots.txt), because each host needs its own file. A responsive site served from a single host needs only one file.

- Ensure that the rules on the mobile host let crawlers reach your mobile content.

Best Practices for Creating a Mobile-Specific Robots.txt File

When creating a mobile-specific robots.txt, consider the following best practices:

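- Keep the mobile rules consistent with the desktop ones, so the mobile version isn't missing pages or the CSS and JavaScript needed to render them.

- Check that the mobile host's robots.txt is fetched without errors in Search Console's robots.txt report. Note that Googlebot Smartphone obeys the rules written for the Googlebot user-agent token; there is no separate smartphone token to target.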
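If you run a separate mobile host, a quick parity check can confirm that its robots.txt doesn't block anything the desktop file allows. The sketch below uses Python's urllib.robotparser as an approximation; the host names follow the example.com convention used above, and the file contents and paths are hypothetical. It checks the Googlebot token because that is the one Googlebot Smartphone obeys.

```python
from urllib.robotparser import RobotFileParser


def load(text: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def parity_gaps(desktop_txt, mobile_txt, paths, agent="Googlebot"):
    """Return paths crawlable on the desktop host but blocked on the mobile host."""
    desktop, mobile = load(desktop_txt), load(mobile_txt)
    return [
        p for p in paths
        if desktop.can_fetch(agent, "https://www.example.com" + p)
        and not mobile.can_fetch(agent, "http://m.example.com" + p)
    ]


desktop_rules = "User-agent: *\nDisallow: /admin/\n"
mobile_rules = "User-agent: *\nDisallow: /admin/\nDisallow: /assets/\n"
print(parity_gaps(desktop_rules, mobile_rules, ["/products/", "/assets/site.css", "/admin/"]))
# ['/assets/site.css']
```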

FAQ Section

Q: What is the purpose of a robots.txt file?

A: The robots.txt file is a simple text file used to communicate rules for web robots on what they can and cannot crawl on your site. Its primary purpose is to manage crawler traffic by keeping crawlers away from specific pages or sections, which reduces server load; it is not a privacy or security control.

Q: Where should I place the robots.txt file?

A: The robots.txt file must be placed in the root directory of each host, e.g., http://example.com/robots.txt.

Q: How do I create a robots.txt file?

A: You can create a robots.txt file using any text editor, such as Notepad or Sublime Text. Write your User-agent and Disallow directives, save the file as plain text (UTF-8) named robots.txt, and upload it to your website's root directory.

Q: Can I use wildcards in a robots.txt file?

A: Yes. The * wildcard matches any sequence of characters, and $ anchors a pattern to the end of a URL. For example, Disallow: /*.pdf$ tells crawlers that support these patterns, including Googlebot and Bingbot, not to crawl URLs ending in .pdf anywhere on the site.

Q: Does robots.txt affect SEO?

A: robots.txt is not a ranking factor, but it controls which URLs search engines crawl, which shapes what they can index. Incorrect or overly restrictive rules can therefore hurt your site's visibility in search engine results.

Q: How do I test my robots.txt file?

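A: You can check how Google reads your file with the robots.txt report and the URL Inspection tool in Google Search Console (the older robots.txt Tester was retired in 2023), use Bing Webmaster Tools' robots.txt tester for Bingbot, or run a crawler, such as Screaming Frog SEO Spider, against your website to ensure that the directives are being correctly applied.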

Q: What is the difference between robots.txt and the robots meta tag?

A: robots.txt controls which URLs crawlers may fetch, typically for whole sections of a site, while robots meta tags control whether an individual page is indexed and whether its links are followed. Robots meta tags are placed in the <head> section of a webpage, and crawlers only see them if robots.txt allows the page to be crawled.

Q: What is the Crawl-delay directive in robots.txt?

A: The Crawl-delay directive asks a specific user-agent to wait between requests, which can help manage server load. It is non-standard: Bing honors it, but Google ignores it. Use it sparingly, as it can slow down how quickly supporting search engines crawl and index your site.

Q: What is the Robots Exclusion Protocol (REP)?

A: The Robots Exclusion Protocol (REP) is an industry standard for managing crawler access to websites, commonly understood to encompass robots.txt, robots meta tags, and the X-Robots-Tag HTTP header. REP was introduced in 1994 and was formalized by the IETF as RFC 9309 in 2022.

Q: What is a mobile-specific robots.txt file?

A: A mobile-specific robots.txt file is the robots.txt served from a separate mobile host, such as http://m.example.com/robots.txt. Because each host needs its own file, it is how you manage crawler access to the mobile version of your site; a responsive site on a single host doesn't need one.

Conclusion

In this guide, we have explored the world of robots.txt, covering its history, best practices, and advanced topics. By understanding how to create, manage, and optimize your robots.txt file, you can improve crawler behavior, enhance overall site performance, and positively impact SEO. Continue learning and improving your robots.txt skills, and don't forget to stay up-to-date with the latest developments in search engine crawling and indexing.